Val Swisher has written yet another excellent article that gets to the heart of content. Just today, in fact, I was questioning this very topic, and not understanding why more people don't understand these basics. Read this thoroughly if you write or manage any content, because this article will help to boil it all down for you and get you in the right (or “write”) mentality. Must, must, MUST read!

–techcommgeekmom

Quality Content is in the Eye of the Consumer

Yesterday, I had the opportunity to speak on a panel at TC Camp. The topic for the panel was “What is Quality Content?” The first thing I noticed is that we have a difficult time defining the term quality when it comes to content. We all have ideas about the characteristics of quality, but we have a difficult time taking a broad view of the term itself. Here is how the Oxford Dictionaries defines quality:

qual·i·ty noun \ˈkwä-lə-tē\

- the standard of something as measured against other things of a similar kind;

- the degree of excellence of something

Joan Lasselle, one of the other panel members, was the first to point out that, as a discipline, technical communications (and marketing communications, I might add) has no standard of excellence. I agree completely. We do not have a bar that has been set, a number, a grade, or any set of common standards that we can use for an objective measure of overall quality content.

Our measurements of quality are based largely on a subjective declaration of characteristics that we agree on. For example, Content Science has a Content Quality Checklist that contains a number of attributes, including:

- Does the content meet user needs, goals, and interests?

- Is the content timely and relevant?

- Is the content understandable to customers?

- Is the content organized logically & coherently?

- Is the content correct?

- Do images, video, and audio meet technical standards, so they are clear?

- Does the content use the appropriate techniques to influence or engage customers?

- Does the content execute those techniques effectively?

- Does the content include all of the information customers need or might want about a topic?

- Does the content consistently reflect the editorial or brand voice and attributes?

- Does its tone adjust appropriately to the context—for example, sales versus customer service?

- Does the content have a consistent style?

- Is the content easy to scan or read?

- Is the content in a usable format, including headings, bulleted lists, tables, white space, or similar techniques, as appropriate to the content?

- Can customers find the content when searching using relevant keywords?

This is a nice list of items to keep in mind when you develop content. Unfortunately, when it comes to actually measuring quality, many of these attributes fall short. Many of them are subjective. There is no common measurement that we can use to compare a piece of content against a standard. Let’s look a little more closely at a few.

Meets User Needs / Usability

We all want our content to meet the needs of our consumer and be usable. But how, exactly, can we measure this? Sure, we can look at things like the number of support calls compared to…something. Compared to other products that we have developed content for? Compared to what standard? If our content met ALL of the needs of our consumer, they’d never have to call us.

Relevance

We want our content to be relevant. Just what is relevance? Is it the same for each of our content consumers? No. It is not. We’d need to know what each and every person needs in order to be 100% sure that our content is relevant for each one. That is not possible, because we don’t know what each of our consumers believes is relevant. Relevance is subjective.

Accuracy / Meets Technical Standards

Accuracy is one of the attributes that is measurable. The Oxford Dictionaries defines accuracy as “the degree to which the result of a measurement, calculation, or specification conforms to the correct value or a standard.” If we say “The phone is 5 inches long,” we are referring to an objective measurement. It either is or is not 5 inches long. Accuracy deals with facts, not feelings or opinions. The same is true with meeting technical standards. If there are standards, we can measure against them.

Readability

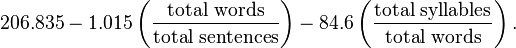

Readability is another measurable attribute. We have scales and measurements that we use to score readability. For example, the Flesch-Kincaid Reading Ease test and the Flesch-Kincaid Reading Level. Here is the formula for the Flesch-Kincaid Reading Ease (FRES) test:
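As commonly published, the Flesch Reading Ease score is 206.835 − 1.015 × (total words ÷ total sentences) − 84.6 × (total syllables ÷ total words). The following is a minimal Python sketch of that calculation, not a reference implementation. The function names are made up for illustration, and the syllable counter is a crude assumption (it counts runs of vowels), so scores will differ somewhat from tools that use a pronunciation dictionary.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): one syllable per run of vowels."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_reading_ease(text: str) -> float:
    """Compute the Flesch Reading Ease score for a piece of text."""
    # Naive splitting: sentences end at ., ! or ?; words are runs of letters.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")

    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


if __name__ == "__main__":
    print(round(flesch_reading_ease("The cat sat on the mat."), 1))
```

Short sentences made of one-syllable words can score above 100, and dense, polysyllabic text can score below 0, so the scale is not strictly bounded.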

Higher scores indicate content that is easier to read; lower scores indicate content that is more difficult to read. Here is how to interpret the score:

| Score | Meaning |
|---|---|
| 90.0–100.0 | easily understood by an average 11-year-old |
| 60.0–70.0 | easily understood by an average 13- to 15-year-old |
| 0.0–30.0 | best understood by university graduates |

Another test for readability is the Dale-Chall Readability Formula. This measurement uses sentence length and the number of ‘hard’ words to calculate the U.S. grade reading level. A word counts as ‘hard’ if it does not appear on a list of 3,000 words that are familiar to children in the fourth grade. You can read all about the Dale-Chall Readability Formula here.
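The Dale-Chall raw score is usually given as 0.1579 × (percentage of hard words) + 0.0496 × (average words per sentence), with 3.6365 added when more than 5% of the words are hard. Here is a simplified Python sketch under stated assumptions: the function name is hypothetical, the caller must supply the familiar-word list (the real 3,000-word list is not included), and the sketch ignores the rules for plurals and other inflected forms that the full procedure applies.

```python
import re
from typing import AbstractSet


def dale_chall_score(text: str, familiar_words: AbstractSet[str]) -> float:
    """Compute a simplified Dale-Chall raw score.

    familiar_words is assumed to hold lowercase entries from the
    familiar-word list; anything not in it is treated as 'hard'.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = [w.lower() for w in re.findall(r"[A-Za-z']+", text)]
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")

    hard_count = sum(1 for w in words if w not in familiar_words)
    percent_hard = 100.0 * hard_count / len(words)
    words_per_sentence = len(words) / len(sentences)

    score = 0.1579 * percent_hard + 0.0496 * words_per_sentence
    if percent_hard > 5.0:
        # Adjustment applied when hard words exceed 5% of the text.
        score += 3.6365
    return score


if __name__ == "__main__":
    # Tiny stand-in list for illustration only.
    sample_list = {"the", "cat", "sat", "on", "mat"}
    print(dale_chall_score("The cat sat on the ottoman.", sample_list))
```

The raw score is then mapped to a grade band using the published Dale-Chall table; that lookup is left out here.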

Findability

Findability is an interesting attribute to consider. There are varying degrees of findability. Take the “macro”: Will a search engine (particularly Google) present my content to a consumer when the consumer searches for it? Companies spend boatloads of money on search engine optimization and advertising, trying to get their content to float to the top of the results page. Unfortunately, every time we seem to have figured out Google’s magic potion for raising the ranking of a webpage, they change the algorithm. In fact, the updates to Google’s algorithm happen incredibly frequently. Moz does a great job of tracking all of these changes. That said, to some extent you can measure macro findability based on page ranking. Just don’t expect the results to be the same from day to day, particularly using organic search.

Then there is the “micro”: Assuming my consumer was able to locate my content on a bookshelf, in a search engine, on my website, etc., can the consumer find the exact topic that she needs? How good is my index? How good are my cross-references? How good is the navigation within my media? Does my content even contain the information she is looking for? In both the macro and micro cases, we can measure findability in terms of the time it takes to locate a specific piece of information. For example, in the global content strategy workshop that I teach, one of the exercises is to measure the time it takes to find the Walmart.com site for India. It is much longer than you’d expect.

In order to have a good standard, we need a baseline measurement. How long does it “usually” take to find an international website? How long does it usually take to find the instructions for how to change the lightbulb in my GE Profile microwave oven? (The answer is “too long.”) If we have a baseline measurement, then we can measure the findability of any particular piece of information against it. I don’t know of a findability metric that is shared among all content. Here is an interesting article in The Usability Blog on the topic.
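One way to picture the baseline comparison described above is as a simple ratio. This Python sketch assumes you have timed several people on a set of "typical" lookups (the baseline) and on the lookup you care about. The function name and the choice of the median are illustrative assumptions, not an established findability metric.

```python
from statistics import median
from typing import Sequence


def findability_ratio(
    baseline_seconds: Sequence[float], observed_seconds: Sequence[float]
) -> float:
    """Return the median observed time divided by the median baseline time.

    A value near 1.0 means the item is about as findable as the baseline;
    a value well above 1.0 means it takes noticeably longer to find.
    """
    if not baseline_seconds or not observed_seconds:
        raise ValueError("both timing lists must be non-empty")
    return median(observed_seconds) / median(baseline_seconds)


if __name__ == "__main__":
    # Made-up timings in seconds, for illustration only.
    baseline = [10.0, 20.0, 30.0]
    observed = [40.0, 60.0, 80.0]
    print(findability_ratio(baseline, observed))
```

The median is used here so that one participant who gives up after ten minutes does not dominate the result; a mean or a percentile would be equally defensible choices.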

Bottom Line

The definition of quality content is a combination of many factors. Some of these factors are objective and can be measured against a standard. Others can be measured, but we need to create a baseline against which to measure them. The rest of the attributes are subjective and squishy. Subjective attributes have no measure. They only have a “feeling” or a “belief.” While feelings and beliefs can be explored and averages can be created, there is no definitive measurement for subjective attributes. What is relevant for me is not necessarily relevant for you. Organization that I deem logical may or may not jibe with the way you think.

The number of subjective attributes that we use to define quality content makes it impossible to truly measure how any one piece of content ranks. After all, what are we ranking it against?