Digital Dao

Evolving Hostilities in the Global Cyber Commons

Sunday, November 24, 2013

In OSINT, All Sources Aren't Created Equal

“In evaluating open-source documents, collectors and analysts must be careful to determine the origin of the document and the possibilities of inherent biases contained within the document.”

– FM 2-22.3: Human Intelligence Collector Operations, p. I-10

“Source and information evaluation is identified as being a critical element of the analytical process and production of intelligence products. However there is concern that in reality evaluation is being carried out in a cursory fashion involving limited intellectual rigour. Poor evaluation is also thought to be a causal factor in the failure of intelligence.”

– John Joseph and Jeff Corkill “Information Evaluation: How one group of Intelligence Analysts go about the Task”

The field of cyber intelligence is fairly new. Fortunately, thanks to the Software Engineering Institute at Carnegie Mellon and the work of Jay McAllister and Troy Townsend, we can take a credible look at the state of the practice in this field:

“Overall, the key findings indicate that organizations use a diverse array of approaches to perform cyber intelligence. They do not adhere to any universal standard for establishing and running a cyber intelligence program, gathering data, or training analysts to interpret the data and communicate findings and performance measures to leadership.”

– McAllister and Townsend, The Cyber Intelligence Tradecraft Project

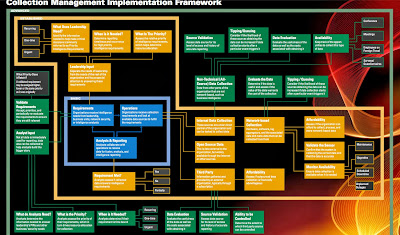

The one thing that isn't covered in their report is source validation and how it contributes to the validity or value of the intelligence data received. However, they did write a follow-up white paper with Troy Mattern entitled “Implementation Framework – Collection Management (.pdf)”.

Please take some time to study the framework and read the white paper. It's an ambitious and very thorough approach to helping companies understand how to get the most value from their cyber intelligence products. Unfortunately, while it specifies data evaluation and source validation, it doesn't provide any specific guidelines on how to implement those two processes.

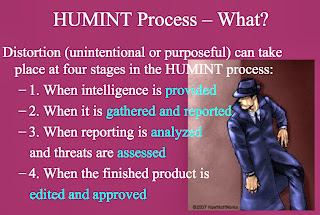

Fortunately, there has been some great work done on source analysis for Human Intelligence (HUMINT) that I believe can be applied to cyber intelligence and OSINT in general. It's a paper written by Pat Noble, an FBI intel analyst who did his master's work at Mercyhurst University's Institute for Intelligence Studies: “Diagnosing Distortion In Source Reporting: Lessons For HUMINT Reliability From Other Fields”.

A PowerPoint version of Noble's paper is also available. Here are a few of the slides from that presentation:

We recognize these failings when it comes to human intelligence collection, but for some reason we don't recognize them or watch for them when it comes to OSINT. The crossover application seems obvious to me and could probably be implemented easily.

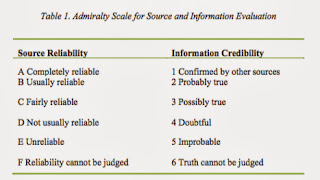

I started this article with a quote from the Army Field Manual FM 2-22.3: Human Intelligence Collector Operations (.pdf). Appendix B in that manual contains a Source and Information Reliability Matrix, which I think is also applicable to cyber intelligence or any analytic work that relies upon open sources.

I think a matrix like this could be applied with very little customization to sources referenced in cyber intelligence reports or security assessments produced by cyber security companies.

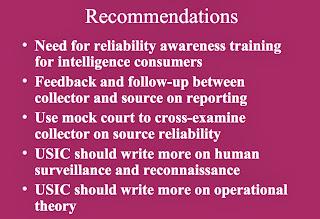

The West Australian Police Force study by John Joseph and Jeff Corkill, “Information Evaluation: How one group of Intelligence Analysts go about the Task”, recommended the use of the Admiralty Scale, which is identical to the Army's matrix shown above.

Again, these scales were developed to evaluate human sources, not published content, but they certainly seem applicable with some minor tweaking.
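To make the idea concrete, here is a minimal sketch of how a two-part rating could be attached to each source cited in a report. It assumes the standard letter and number labels of the FM 2-22.3 Appendix B matrix (A through F for source reliability, 1 through 6 for information credibility). The `SourceRating` class, the `needs_corroboration` helper, and its default thresholds are hypothetical names and values chosen for illustration; they are not part of the field manual or of any tool mentioned in this article.

```python
from dataclasses import dataclass

# Labels assumed to match the FM 2-22.3 Appendix B matrix.
SOURCE_RELIABILITY = {
    "A": "Reliable",
    "B": "Usually reliable",
    "C": "Fairly reliable",
    "D": "Not usually reliable",
    "E": "Unreliable",
    "F": "Cannot be judged",
}

INFO_CREDIBILITY = {
    1: "Confirmed",
    2: "Probably true",
    3: "Possibly true",
    4: "Doubtfully true",
    5: "Improbable",
    6: "Cannot be judged",
}


@dataclass(frozen=True)
class SourceRating:
    """Hypothetical record pairing one cited source with its two ratings."""
    citation: str
    reliability: str   # "A".."F": how trustworthy the source itself is
    credibility: int   # 1..6: how believable this specific claim is

    def __post_init__(self):
        if self.reliability not in SOURCE_RELIABILITY:
            raise ValueError(f"unknown reliability: {self.reliability!r}")
        if self.credibility not in INFO_CREDIBILITY:
            raise ValueError(f"unknown credibility: {self.credibility!r}")

    @property
    def code(self) -> str:
        # Conventional shorthand, e.g. "B2".
        return f"{self.reliability}{self.credibility}"

    def describe(self) -> str:
        return (f"{self.code}: {SOURCE_RELIABILITY[self.reliability]} source, "
                f"{INFO_CREDIBILITY[self.credibility].lower()} information")


def needs_corroboration(rating: SourceRating,
                        min_reliability: str = "B",
                        min_credibility: int = 2) -> bool:
    """Flag a source for independent verification.

    The thresholds are illustrative assumptions. "F" and 6 mean
    "cannot be judged" rather than "worst", so they are always flagged.
    """
    if rating.reliability == "F" or rating.credibility == 6:
        return True
    return (rating.reliability > min_reliability
            or rating.credibility > min_credibility)


if __name__ == "__main__":
    rating = SourceRating("Vendor blog post", "C", 3)
    print(rating.describe())
    print("Needs corroboration:", needs_corroboration(rating))
```

Keeping the two ratings separate matters: a usually reliable source can still pass along an improbable claim, and an unknown source can report something that is independently confirmed.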

It's important to note that only part of the problem lies in the lack of source evaluation methods. Another very large contributing problem is the lack of standardized cyber intelligence tradecraft pointed out by McAllister and Townsend in their Cyber Intelligence Tradecraft paper:

“Tradecraft: Many government organizations have adopted the intelligence community standard of consistently caveating threat analysis with estimative language and source validation based on the quality of the sources, reporting history, and independent verification of corroborating sources. Numerous individuals with varying levels of this skillset have transitioned to cyber intelligence roles in industry and academia, but the practice of assessing credibility remains largely absent. The numerous analytical products reviewed for the CITP either did not contain estimative or source validation language, or relied on the third-party intelligence service providing the information to do the necessary credibility assessment.” (p.11)

And of course, because the field is so new, there's no standard yet for cyber intelligence training (McAllister and Townsend, p. 13).

IN SUMMARY

There are numerous examples of cyber security reports produced by commercial companies and government agencies where conclusions were drawn from less-than-hard data, including ones that I or my company wrote. Unless you're working in a scientific laboratory, source material related to cyber threats is rarely 100% reliable. Since no one is above criticism when it comes to this problem, it won't be hard for you to find a report to critique. In fact, it seems like a different information security company issues a new report at least once a month, if not once a week, so feel free to pick one at random and validate the sources using any of the resources that I compiled for this article.

If you know of other source evaluation resources, please reference them in the comments section.

If you're a consumer of cyber intelligence reports or threat intelligence feeds, please ask your vendor how the company validates the data that it's selling you, and then run that data through your own validation process using one of the resources referenced above.

I'd love to hear from any readers who implement these suggestions and have experiences to share, either in confidence via email or in the comments section below.

UPDATE (11/24/13): A reader just recommended another excellent resource: Army Techniques Publication 2-22.9 “Open-Source Intelligence”. It discusses deception bias and content credibility, both of which must be accounted for in source validation.