Robert,

I read your appraisal several times. In my opinion, your understanding of the problems continues to be on the mark and has been remarkably consistent over the last twenty or so years. Yet your work on both the process and products of intelligence is very high level, and in this latest appraisal, as in your previous works, you leave it to the reader's imagination to figure out how to actually implement the ideas you so eloquently express. This I think is a mistake, in that potential employers, impressed with your macro ideas, would be interested in how these ideas could be brought to the implementation stage. Attached is a supplement to your appraisal on collection and analysis.

In any event I hope that this finds you well and upbeat. You deserve a position that would reflect both your knowledge and your commitment to saving the IC from itself.

A Fan

ROBERT STEELE: The time has indeed come to create an alternative to the existing system. I have started to work with a select group across the emergent M4IS2/OSE network, on a firm geospatial foundation. While many of my ideas have been misappropriated and corrupted over the past 20 years, no one has actually attempted to implement the coherent vision: sources, softwares, and services all in one, and this time around, all open source, all multi-everything. The PhD thesis, the School of Future-Oriented Hybrid Governance, and the World Brain Institute (and perhaps even the Open Source Agency as a non-US international body) are the beginning of my final twenty-year run. Intelligence with integrity. Something to contemplate.

Extension of Appraisal Details

Collection

Throughout the Cold War the collection of useful analog telecommunications on the Soviet Union and its allies was a difficult task. It was possible to use what many called the “vacuum cleaner” on those few signals that were accessible because the amount of data that they carried was quite manageable. The same was true for the collection of international communications, mostly in the form of Telex (teleprinter) traffic. This was under what Jim Bamford has called the “Echelon Program,” and it produced considerably more data, but it was still manageable. From the standpoint of the analysts, thanks to early automation, it was still fairly easy to tailor the available information to subjects that fell under existing intelligence requirements.

When the ARPANET (first brought online in 1969) came into wider use in the mid-1980s, many in the IC saw its potential as a game changer. By the mid-1990s the ARPANET had given way to the Internet, and the Age of Digital Communications became a reality. Unfortunately, NSA decided that the collection strategy that had served it so well during the Cold War would work just as well in this new age.

To his credit, General Kenneth Minihan (USAF ret.), who was Director of NSA from 1996-1999, saw that this was a strategy for disaster and sought to bring NSA into the digital age and the 21st Century. He failed to achieve this because of active resistance on the part of his subordinate managers from his Deputy Director on down.

As a result, when he was replaced by General Mike Hayden (USAF ret.), the director from 1999 to 2005, Hayden repeatedly told both his subordinates and the U.S. Congress that NSA was “drowning in data.” The solution to this obvious problem, originally proposed by General Minihan, was to develop a suite of information management systems that would harness the increasing power of automated processors (i.e. computers) to process and sort the data as it was collected, store what appeared useful and reject what did not, organize the useful data into retrievable batches, and make it easily available to language and research analysts. This was the purpose of the failed project called “Trailblazer,” and it was also the goal of several subsequent and equally unsuccessful projects.
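To make the intended workflow concrete, here is a minimal Python sketch of the sort, store or reject, and batch steps described above. It is an illustration only and says nothing about how Trailblazer or any real system was built. The names `Item`, `looks_useful`, and `triage`, and the simple watch-term test for "appears useful," are all assumptions introduced for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Item:
    """One collected item (hypothetical structure, for illustration only)."""
    item_id: str
    topic: str
    text: str


def looks_useful(item, watch_terms):
    """Assumed usefulness test: the text mentions at least one watch term."""
    text = item.text.lower()
    return any(term in text for term in watch_terms)


def triage(items, watch_terms):
    """Sort items as they arrive: keep useful ones in per-topic batches, reject the rest."""
    batches = defaultdict(list)   # topic -> retained items, retrievable by topic
    rejected = []                 # items that did not appear useful
    for item in items:
        if looks_useful(item, watch_terms):
            batches[item.topic].append(item)
        else:
            rejected.append(item)
    return dict(batches), rejected


if __name__ == "__main__":
    sample = [
        Item("a1", "shipping", "Cargo manifest lists a pipeline valve order"),
        Item("a2", "shipping", "Weather delay at the port"),
        Item("a3", "finance", "Wire transfer for the valve order confirmed"),
    ]
    kept, dropped = triage(sample, watch_terms={"valve"})
    print(sorted(kept), [i.item_id for i in dropped])
```

In this sketch the analyst retrieves a batch by topic instead of searching the whole store. The hard part in practice is the usefulness test itself, which is reduced here to a single assumed rule.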

Now there has always been an undercurrent of rebels who have argued since the late 1990s that NSA should change its collection strategy to selected targeting, using a combination of technical signal characteristics, metadata characteristics, and key words to acquire useful data upfront. This has been successfully countered by the argument that it would mean something might be missed. As a result, the term “targeted collection” means something very different from what these rebels had in mind.
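The selected-targeting idea can be sketched as a filter applied before anything is stored, combining the three kinds of criteria named above. This is a hypothetical Python illustration under stated assumptions, not a description of any actual collection system. The `Intercept` and `Selector` classes, their field names, and the sample values are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Intercept:
    """A candidate item seen at collection time (hypothetical structure)."""
    signal_type: str   # technical signal characteristic, e.g. "telex"
    metadata: dict     # metadata characteristics, e.g. {"origin": "region-a"}
    text: str          # content, where available


@dataclass
class Selector:
    """Assumed targeting rule: all three kinds of criteria must match."""
    signal_types: set
    metadata_match: dict
    keywords: set      # lowercase key words

    def matches(self, item):
        if item.signal_type not in self.signal_types:
            return False
        for key, wanted in self.metadata_match.items():
            if item.metadata.get(key) != wanted:
                return False
        words = set(item.text.lower().split())
        return bool(words & self.keywords)


def collect(stream, selector):
    """Keep only what the selector matches; everything else is never stored."""
    return [item for item in stream if selector.matches(item)]


if __name__ == "__main__":
    selector = Selector({"telex"}, {"origin": "region-a"}, {"shipment"})
    stream = [
        Intercept("telex", {"origin": "region-a"}, "Shipment leaves Friday"),
        Intercept("telex", {"origin": "region-b"}, "Shipment leaves Friday"),
        Intercept("voice", {"origin": "region-a"}, "shipment delayed"),
        Intercept("telex", {"origin": "region-a"}, "Greetings from the office"),
    ]
    print(len(collect(stream, selector)))
```

The trade-off raised by the counterargument is visible in the sketch: three of the four sample items are discarded at the point of collection, so anything the selector fails to anticipate is missed for good.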

So NSA continues to collect ever-increasing amounts of data. Just as in the analog days, when some crisis du jour suddenly appears (think Benghazi), analysts frantically search through the mass of data to see if anything relevant can be found. (It has been over thirty years since I was a language analyst, but my guess is that today’s language analysts do the same through their massive stores of voice data.) This mass of processed but largely unevaluated data is the so-called “big data,” at least for NSA.

Analysis

As for desktop information management systems (computers), they have been based for at least thirty years on some very clever retrieval programs that have become increasingly sophisticated. Even ten years ago it was possible to retrieve data and manipulate it in a variety of ways (clustering, hierarchies, etc.). With the advent of real data mining, i.e. using algorithms that can detect hidden patterns, automatically change query parameters based on analysis of retrieved data, and so on, it is possible for analysts to do much more than ever before. So in point of fact, analysts in the U.S. Intelligence Community have better tools than ever before to help them transform unevaluated information into real intelligence. So why don’t they?

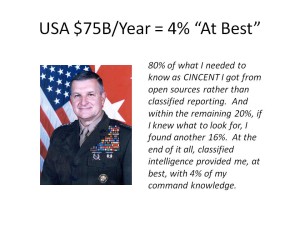

Well, first, it is a mistake to assume that all IC intelligence analysts perform the same kind of work. They do not. An all-source analyst at CIA or DIA ought to combine the qualities of objective researcher and systems thinker with some level of subject matter expertise to transform often ambiguous, often misleading information into something close to accurate intelligence. That this appears no longer to be done at CIA or DIA suggests a lack of leadership, especially at the working level, combined with the lack of a real training program for analysts.

Technical analysis as done by NSA and NGA is more a matter of understanding the technical details of what is collected and then taking very raw information and transforming it into something that can be understood by someone unfamiliar with technical data. This material, even when thoroughly analyzed, will often be fragmentary and incomplete. At one point NSA and NGA analysts were working closely together, combining the information they developed into GIS maps that could be used by all-source analysts to develop so-called finished intelligence. This is evidently still in pilot project mode and not ready for actual replication anywhere else.

The problems that today plague the art and craft of analysis in both the finished intelligence agencies (CIA, DIA) and the technical intelligence agencies (NGA, NSA) appear to be the result of extremely poor senior management, a lack of competent technical leadership at the working level, and poor or non-existent systematic analyst training. This in turn has produced a lack of engagement on the part of many intelligence analysts, which is the principal cause of “intelligence failures.”

Original Post to Which This Anonymous Fan is Responding:

2014 Robert Steele: Appraisal of Analytic Foundations – Email Provided, Feedback Solicited – UPDATED